Setting Up a Single-Node Hadoop Environment on Ubuntu

Create the hadoop user

sudo useradd -m hadoop -s /bin/bash

Set a password for the hadoop user

sudo passwd hadoop

Grant the hadoop user administrator privileges

sudo adduser hadoop sudo
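Before moving on, it can help to confirm that the account exists and really is in the sudo group. The following is a minimal sketch, not part of the original steps; the helper name user_in_group is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if user $1 belongs to group $2.
user_in_group() {
    local user="$1" group="$2"
    # id -nG prints the user's group names separated by spaces;
    # grep -x matches a whole line, so "sudo" will not match "sudoers".
    id -nG "$user" 2>/dev/null | tr ' ' '\n' | grep -qx -- "$group"
}

# Report whether the hadoop user from this guide can use sudo.
if user_in_group hadoop sudo; then
    echo "hadoop is in the sudo group"
else
    echo "hadoop is missing or not in the sudo group"
fi
```

Group membership added with adduser only takes effect in new login sessions, so an already-open shell of the hadoop user may need to be restarted.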

Install and configure SSH

sudo apt-get install ssh

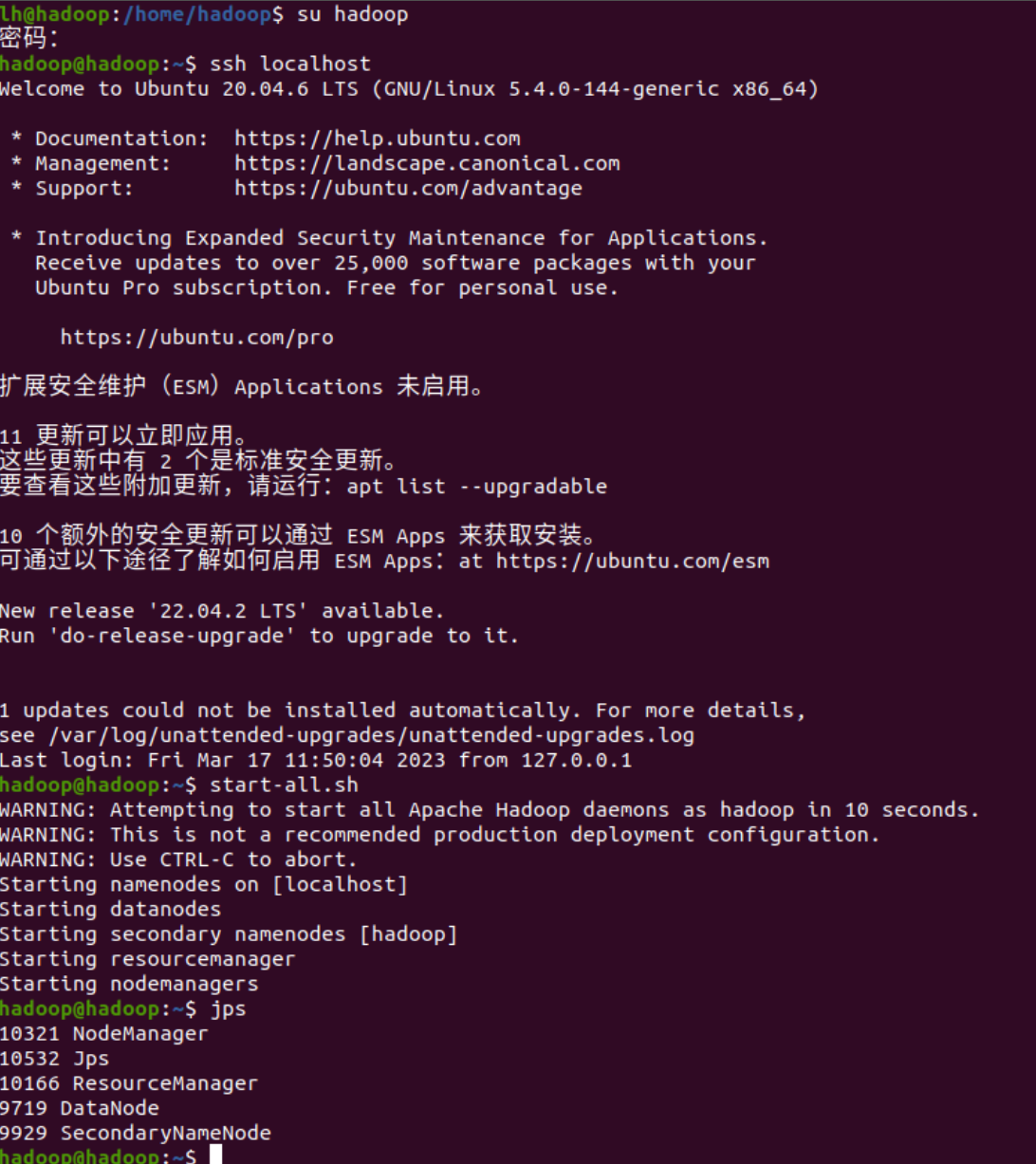

su hadoop

ssh-keygen -t rsa -P ""

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Next, run the ssh command to test whether the setup works.

ssh localhost

Configure the Java environment

sudo apt-get update
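The step above only refreshes the package index, and the later configuration sets JAVA_HOME to /usr/lib/jvm/java. If a JDK is already installed (the original does not say which package was used), one way to find its real directory is to resolve the java executable on PATH. This is only a sketch; derive_java_home is a hypothetical helper, and on some JDK 8 layouts the result may point at a jre subdirectory rather than the JDK root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive a JAVA_HOME candidate from a java executable.
# It resolves symlinks (for example /usr/bin/java -> /etc/alternatives/java
# -> the real JDK) and then strips the trailing /bin/java.
derive_java_home() {
    local java_bin
    java_bin="$(readlink -f "$1")" || return 1
    dirname "$(dirname "$java_bin")"
}

if command -v java >/dev/null 2>&1; then
    echo "JAVA_HOME candidate: $(derive_java_home "$(command -v java)")"
else
    echo "java is not on PATH; install a JDK first"
fi
```

The printed directory is the value to use wherever this guide writes /usr/lib/jvm/java, if that path does not exist on your machine.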

Install Hadoop

- Download Hadoop

Tsinghua mirror: https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/

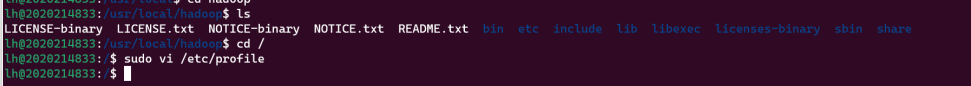

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.3.4/hadoop-3.3.4.tar.gz

Configure environment variables

Extract the Hadoop archive and move it to /usr/local/hadoop:

tar -zxvf hadoop-3.3.4.tar.gz

sudo mv hadoop-3.3.4 /usr/local/hadoop

In a terminal, run vi ~/.bashrc (sudo is not needed for a file in your own home directory), press i to enter insert mode, and add the following lines:

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

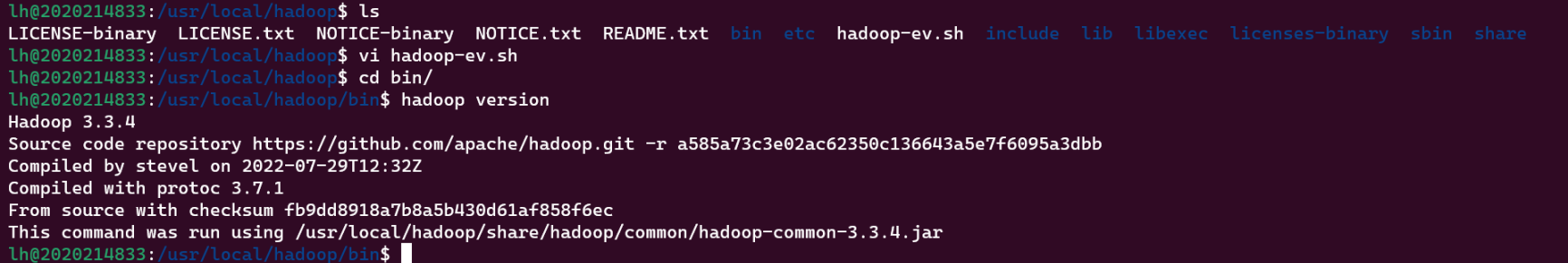
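The exports only take effect in new shells, or after running source ~/.bashrc in the current one. As a rough check that they were picked up, something like the following could be used. It is a sketch under the assumption that the variables are named as above; check_hadoop_env is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check the variables exported in ~/.bashrc.
# Prints one line per problem and returns non-zero if anything is wrong.
check_hadoop_env() {
    local status=0 dir
    if [ -z "${HADOOP_HOME:-}" ]; then
        echo "HADOOP_HOME is not set"
        return 1
    fi
    if [ ! -d "$HADOOP_HOME" ]; then
        echo "HADOOP_HOME does not exist: $HADOOP_HOME"
        status=1
    fi
    # Both bin and sbin should be on PATH so that the hadoop command
    # and start-all.sh can be found.
    for dir in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
        case ":$PATH:" in
            *":$dir:"*) ;;
            *) echo "not on PATH: $dir"; status=1 ;;
        esac
    done
    return "$status"
}

# Reload the file in the current shell first (source ~/.bashrc), then:
if check_hadoop_env; then
    echo "Hadoop environment looks consistent"
fi
```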

Edit the Hadoop configuration files

Open the /usr/local/hadoop/etc/hadoop/ directory.

Configure hadoop-env.sh

# Explicitly declare the Java path (replace it with the JDK directory on your machine)

export JAVA_HOME=/usr/lib/jvm/java

Configure ~/.bashrc

#HADOOP VARIABLES START

export JAVA_HOME=/usr/lib/jvm/java

export HADOOP_INSTALL=/usr/local/hadoop

export PATH=$PATH:$HADOOP_INSTALL/bin

export PATH=$PATH:$HADOOP_INSTALL/sbin

export HADOOP_MAPRED_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_HOME=$HADOOP_INSTALL

export HADOOP_HDFS_HOME=$HADOOP_INSTALL

export YARN_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_INSTALL/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_INSTALL/lib/native"

#HADOOP VARIABLES END

Configure core-site.xml

Before editing this file, use a superuser account to create a directory and give the hadoop user ownership of it:

sudo mkdir -p /app/hadoop/tmp

sudo chown hadoop:hadoop /app/hadoop/tmp

Next, switch back to the hadoop user and edit /usr/local/hadoop/etc/hadoop/core-site.xml with vi so that it reads:

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/app/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:54310</value>

<description>The name of the default file system. A URI whose

scheme and authority determine the FileSystem implementation. The

uri's scheme determines the config property (fs.SCHEME.impl) naming

the FileSystem implementation class. The uri's authority is used to

determine the host, port, etc. for a filesystem.</description>

</property>

</configuration>

Edit mapred-site.xml

vi /usr/local/hadoop/etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:54311</value>

<description>The host and port that the MapReduce job tracker runs

at. If "local", then jobs are run in-process as a single map

and reduce task.

</description>

</property>

</configuration>

Edit hdfs-site.xml

Before editing, switch back to a superuser account and create the required directories:

sudo mkdir -p /usr/local/hadoop_store/hdfs/namenode

sudo mkdir -p /usr/local/hadoop_store/hdfs/datanode

sudo chown -R hadoop:hadoop /usr/local/hadoop_store

Then switch back to the hadoop user and edit /usr/local/hadoop/etc/hadoop/hdfs-site.xml so that it reads:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

<description>Default block replication.

The actual number of replications can be specified when the file is created.

The default is used if replication is not specified in create time.

</description>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop_store/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop_store/hdfs/datanode</value>

</property>

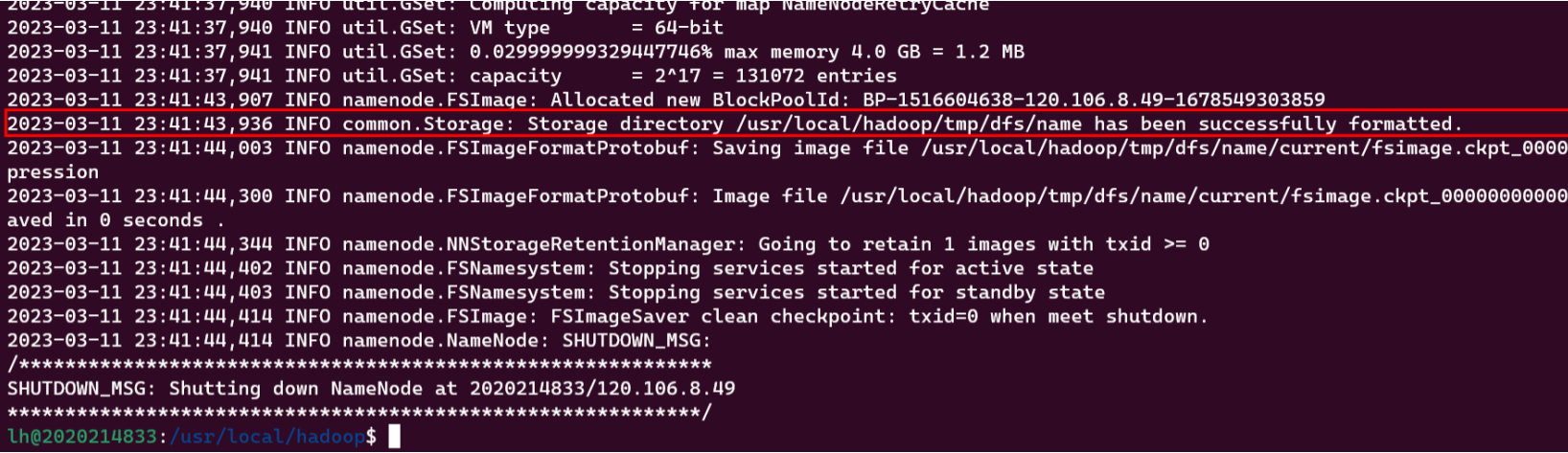

</configuration>格式化

HDFShadoop namenode –format
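Formatting is meant to be done once; running it again on a NameNode that already holds data wipes the file system metadata. A small guard can make that harder to do by accident. The sketch below relies on the fact that a formatted NameNode directory contains a current/VERSION file; the helper name namenode_is_formatted is hypothetical, and the directory is the dfs.namenode.name.dir value from hdfs-site.xml above.

```shell
#!/usr/bin/env bash
# Hypothetical guard: a formatted NameNode directory contains current/VERSION.
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Directory taken from dfs.namenode.name.dir in hdfs-site.xml.
NAME_DIR="/usr/local/hadoop_store/hdfs/namenode"

if namenode_is_formatted "$NAME_DIR"; then
    echo "NameNode already formatted; skipping to keep existing data"
else
    echo "NameNode not formatted yet; run: hdfs namenode -format"
fi
```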

Start Hadoop

Set up passwordless login

ssh-keygen -t rsa

chmod 755 ~/.ssh

cd ~/.ssh

cat id_rsa.pub >> authorized_keys

Connect to the local machine:

ssh localhost
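If ssh localhost still asks for a password, overly loose permissions on ~/.ssh are a common cause. The following sketch tightens them and then tests the login non-interactively. Both helper names are hypothetical, and the check assumes localhost's host key has already been accepted once, since BatchMode cannot answer the first-connection prompt.

```shell
#!/usr/bin/env bash
# Hypothetical helper: tighten permissions on an SSH directory.
# With StrictModes enabled, sshd ignores keys whose directory or
# authorized_keys file is writable by group or others.
fix_ssh_perms() {
    local dir="$1"
    chmod 700 "$dir" || return 1
    if [ -f "$dir/authorized_keys" ]; then
        chmod 600 "$dir/authorized_keys"
    fi
}

# Hypothetical helper: succeed only if key-based login needs no prompt.
# BatchMode=yes makes ssh fail instead of asking for a password.
can_ssh_without_password() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true 2>/dev/null
}

# Usage (run as the hadoop user):
#   fix_ssh_perms "$HOME/.ssh"
#   can_ssh_without_password localhost && echo "passwordless SSH works"
```

The start scripts log in to localhost over SSH to launch each daemon, so this check is worth passing before the next step.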

Start Hadoop:

start-all.sh

Check the running services:

jps
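On a working single-node setup, jps should list the HDFS daemons (NameNode, DataNode, SecondaryNameNode) and the YARN daemons (ResourceManager, NodeManager) that start-all.sh launches. As a sketch, the output can be checked automatically; missing_daemons is a hypothetical helper that reads jps output from standard input.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and print each expected
# daemon that is absent. jps prints "<pid> <ClassName>" on each line.
missing_daemons() {
    local output daemon
    output="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Compare the second column exactly, so that NameNode does not
        # match SecondaryNameNode.
        if ! printf '%s\n' "$output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
        fi
    done
}

# Usage: jps | missing_daemons
```

An empty result means all five daemons are running; any name printed points at the log file to inspect under $HADOOP_HOME/logs.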

- Title: Setting Up a Single-Node Hadoop Environment on Ubuntu

- Author: liohi

- Created: 2023-04-16 16:43:37

- Updated: 2023-04-23 15:32:41

- Link: https://liohi.github.io/2023/04/16/Ubuntu 单机配置 Hadoop/

- License: This article is licensed under CC BY-NC-SA 4.0.